Methodology

The Main Question

“How difficult is it for an attacker to find a new exploit for this software?”

Attackers have limited resources, and just like anyone else, they don't like to waste their time doing something the hard way if an easier path is available. They have tricks and heuristics they use to assess the relative difficulty of exploiting software, so that they can focus on the easy targets and low-hanging fruit. Mudge has had a long career of doing just that (legally, and for research purposes), so he's developed his own personal toolkit of measurements to take when assessing software risk. By consulting with other luminaries of the security field who have extensive exploit development experience, we've been able to build up the list of static analysis metrics and features which we currently assess.

Scoring

Our ratings are based on a combination of measured data and expert analysis. The primary factors under consideration are safety features and function hygiene, with an emphasis on exploit mitigation and risk reduction.

Our current score weightings heavily emphasize safety features and function hygiene. The safety features in particular are the easiest issues to fix, and are the most fundamental. When the software industry has gotten to a point where these are included as a matter of course, we will adjust our score weightings to place a greater emphasis on more subtle elements like complexity. The way we think of it is, if a house’s front door is unlocked, we’re not going to worry too much about getting shatter sensors installed in the windows. Vendors need to get the basics in place first, and then we’ll move our focus to the next thing which can move the overall quality of software forward. If you happen to have those basics in place in your own products, however, and want to know what we’ll be focusing on in the future, look to the complexity and dynamic testing measurements.

Score Weighting

For Version 1 of our scoring system, we are focusing on Safety Features and Code Hygiene as dominant factors in our risk analysis rating. In the future we hope to factor in both Code Complexity scores and Crash Testing results.

Static Analysis

There are three main categories of static analysis features that we look at for a software binary:

Scoring: A binary's score is penalized by 20 points for each missing feature below.

| Feature | OS | Why It's Important |
|---|---|---|
| Address Space Layout Randomization (ASLR) | All | Attackers will try to piece existing computer code together to make the system do what they want, like using words out of different magazines to write a ransom note. If each application's code is loaded at a predictable location, this is pretty easy to do, so modern operating systems randomize the locations of code segments. To follow our metaphor, if the attacker doesn't know which words are where, they can't write the message they want to write in their note. |
| Data Execution Prevention (DEP) | All | DEP tries to make sure attacker-introduced data is not treated as executable instructions by the computer. Some operating systems have separate application armoring settings for Stack DEP and Heap DEP, while others protect both with a single setting. The stack and the heap are the two locations where data is stored by the application. |
| Control Flow Integrity (CFI) | Windows | Control Flow Integrity (CFI) refers to any safety features that try to prevent attackers from redirecting the path of code execution. Many attacks involve hijacking software execution, so that the attacker can direct the computer to a different code path than originally intended. Sometimes this is to get their own code executed, and other times they're trying to cobble together a new effect from snippets of existing code, sort of like someone writing a ransom note by cutting out words from a magazine. |
| Stack Guards | All | Buffer overflows, one of the most commonly exploited vulnerability types today, involve an attacker overwriting values on the stack so that they can change what code is being executed. Stack guards, or canaries, try to detect manipulation of the stack so that these attacks can be caught in progress and stopped before they succeed. |
| Fortification | macOS, Linux | When source fortification is enabled, the compiler replaces some risky functions with safer ones. The compiler can only do this if it can determine what all the parameters would be, so not all instances of a function get fortified, even when this feature is enabled. You can think of this like spell check and autocorrect: if it isn't clear what you meant to type, things get left as you originally typed them. |
| Relocation Read-Only (RELRO) | Linux | RELRO stands for Relocation Read-Only, and is a mitigation of a Linux-specific vulnerability. Certain parts of the binary that are particularly sensitive need to be made read-only after they are built, but before the program starts running, so that an attacker can't use them to hijack operations. |
| Safe Structured Exception Handling (SEH) | Windows | SEH stands for Structured Exception Handler. When an error occurs during the software's execution, the SEH gives the software its instructions on how to handle that error. Safe SEH makes sure that an attacker can't introduce changes to the SEH pointers and code. |
| 64-bit | All | Many safety features are more effective for 64-bit binaries, or are only available in their strongest form for 64-bit binaries, so we treat this as a separate safety feature. |
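To make the penalty rule concrete, here is a minimal sketch of how the 20-point deduction per missing safety feature could be tallied. It is an illustration only, not our actual scoring code: the function name `safety_penalty`, the short feature labels, and the reading of "All" as Windows, macOS, and Linux are assumptions made for the example. Only features that apply to the binary's operating system count as missing.

```python
# Illustrative sketch only; names and structure are assumptions, not the real scorer.
MISSING_FEATURE_PENALTY = 20  # from the text: 20 points per missing feature

# Assumption: "All" in the table means these three operating systems.
ALL_OS = {"windows", "macos", "linux"}

# Feature applicability, transcribed from the table above.
FEATURES_BY_OS = {
    "ASLR": ALL_OS,
    "DEP": ALL_OS,
    "CFI": {"windows"},
    "Stack Guards": ALL_OS,
    "Fortification": {"macos", "linux"},
    "RELRO": {"linux"},
    "Safe SEH": {"windows"},
    "64-bit": ALL_OS,
}


def safety_penalty(os_name, present_features):
    """Return (score adjustment, missing features) for one binary.

    Features that do not apply to `os_name` are ignored rather than penalized.
    """
    applicable = [f for f, oses in FEATURES_BY_OS.items() if os_name in oses]
    missing = [f for f in applicable if f not in present_features]
    return -MISSING_FEATURE_PENALTY * len(missing), missing


if __name__ == "__main__":
    adjustment, missing = safety_penalty(
        "linux", {"ASLR", "DEP", "Stack Guards", "64-bit"}
    )
    print(adjustment, missing)
```

In this example the Linux binary lacks Fortification and RELRO, so it loses 40 points, while CFI and Safe SEH are not counted against it because they are Windows-only in the table.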

| Function Ratings | Scoring | What Makes a Function this Type | Sample of Functions Checked For |
|---|---|---|---|
| Good Functions | +10 if good functions are present in the binary | These are much safer replacements for historically bad and risky functions. For example, “strlcpy” is the good version of strncpy and strcpy, which are in the risky and bad categories, respectively. Use of these functions is relatively rare, but indicates that the developers prioritize secure coding. | |
| Risky Functions | -5 if risky functions are present in the binary | These are slightly safer functions than the bad functions, sometimes ones which were originally introduced specifically to fix flaws in those bad functions, but which are still tricky to use without introducing vulnerable bugs into code. More security-savvy programmers will generally use the “good” versions of these functions instead. | |
| Very Risky Functions | -15 if very risky functions are present in the binary | These are functions which are difficult to use without introducing vulnerabilities, and/or which have been known to introduce buffer overflows or other vulnerable bugs into software. They can be used safely, but often aren’t, and there are safer alternatives available, so their use can be indicative of a lack of security awareness. | |
| Extremely Risky Functions | -25 if extremely risky functions are present in the binary | These are the rare functions that have no place in commercial software, due to their extreme insecurity. Their use is fairly rare, but a big red flag. | |
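The function ratings can be read as a per-category adjustment: each category contributes its points once if any function from that category appears in the binary. The sketch below illustrates that reading. It is hypothetical rather than our actual implementation: the function name `hygiene_adjustment` and the tiny lookup table are invented for the example, the placement of strcpy under "very risky" is an assumption (the text only calls it "bad"), and gets is an assumed example of an extremely risky function.

```python
# Illustrative sketch only; the lookup table is a tiny, partly assumed sample.
CATEGORY_POINTS = {
    "good": 10,
    "risky": -5,
    "very_risky": -15,
    "extremely_risky": -25,
}

EXAMPLE_FUNCTIONS = {
    "strlcpy": "good",          # named in the text as the good version
    "strncpy": "risky",         # named in the text as risky
    "strcpy": "very_risky",     # assumption: the text only calls it "bad"
    "gets": "extremely_risky",  # assumption: not named in the text
}


def hygiene_adjustment(symbols):
    """Sum the points for every category that has at least one function present.

    Each category counts once, no matter how many of its functions appear.
    """
    categories_present = {
        EXAMPLE_FUNCTIONS[name] for name in symbols if name in EXAMPLE_FUNCTIONS
    }
    return sum(CATEGORY_POINTS[category] for category in categories_present)


if __name__ == "__main__":
    # Symbols as they might be listed from a binary's import table.
    print(hygiene_adjustment({"strcpy", "strncpy", "printf"}))
```

Here a binary that imports both strcpy and strncpy picks up the risky and very risky adjustments, while printf is simply not in the sample table and has no effect.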

Crash Testing

The most commonly accepted method of testing software today is crash testing, or fuzzing. Software is given malformed inputs, to see if and how it crashes. You can learn a lot about how something is built from how it breaks, and fuzzing provides a lot of insight into how robust or potentially exploitable software is.
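As a rough illustration of the idea, the sketch below fuzzes a toy parser by randomly mutating a valid input and recording which mutations cause an unexpected failure. Everything in it is invented for the example and is not one of our tools: `parse_record` is a deliberately buggy stand-in for the software under test, and `mutate` and `fuzz` are hypothetical helper names. A `ValueError` is treated as a clean rejection of bad input, and any other exception is treated as a crash.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Toy parser for: [length byte][payload][checksum byte].

    Deliberate bug: it trusts the length byte without checking the input size.
    """
    if not data:
        raise ValueError("empty input")
    length = data[0]
    payload = data[1:1 + length]
    checksum = data[1 + length]  # IndexError if the input is shorter than claimed
    if sum(payload) % 256 != checksum:
        raise ValueError("bad checksum")
    return payload


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Overwrite a few random bytes and sometimes truncate the input."""
    data = bytearray(seed)
    for _ in range(rng.randint(1, 3)):
        data[rng.randrange(len(data))] = rng.randrange(256)
    if rng.random() < 0.3:
        del data[rng.randrange(len(data)):]
    return bytes(data)


def fuzz(seed: bytes, iterations: int = 1000, rng_seed: int = 0):
    """Return (input, exception name) pairs for inputs that crashed the parser."""
    rng = random.Random(rng_seed)
    crashes = []
    for _ in range(iterations):
        candidate = mutate(seed, rng)
        try:
            parse_record(candidate)
        except ValueError:
            continue  # malformed input was rejected cleanly
        except Exception as exc:  # anything else counts as a crash
            crashes.append((candidate, type(exc).__name__))
    return crashes


if __name__ == "__main__":
    valid = bytes([3]) + b"abc" + bytes([sum(b"abc") % 256])
    found = fuzz(valid)
    print(len(found), "crashing inputs found")
```

Real fuzzers work on compiled binaries and use far smarter input generation, but the loop is the same in spirit: generate malformed inputs, run the target, and keep the inputs that break it for later analysis.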

Data Sources

Most of the software we test is obtained the same way any consumer would get it – by buying it. This ensures that the software we test is the same as the software that end users have access to. We also get some software through publicly available sources, in the case of open source products, or where IoT firmware is available for download. Some IoT software we extract directly off of the IoT products, and in other cases we obtain software through partnerships with other non-profit and research entities. We never get software to test through private relationships with the vendors whose products we’re evaluating.

Analysis Tools

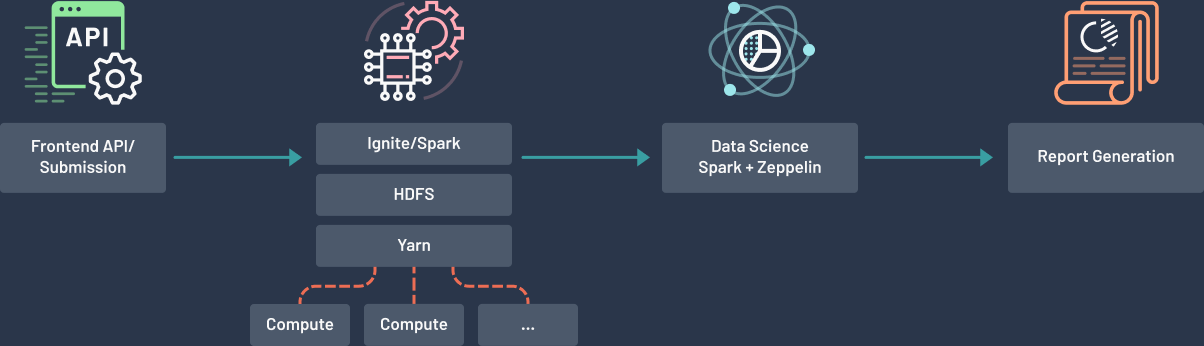

Our security evaluations are performed using a combination of proprietary and publicly-available software analysis tools, employing both static and dynamic methods of analysis. These tools are used to examine the features described elsewhere on this page: code hygiene, build safety features, and crash testing.

The publicly-available tools in our toolchest are all well-known in the software analysis community. However, in the interest of encouraging vendors to go above and beyond the bare minimum, we do not publicly disclose what these tools are. Instead, we advise vendors to perform their own thorough evaluations of their products prior to release, using a wide variety of different analysis tools.

Though we use publicly-available tools whenever possible, certain aspects of our mission have motivated us to develop some proprietary tools as well. These tools are designed to serve our organization's particular needs, most notably the ability to perform cross-platform software analysis and the ability to collect detailed debug and tracing data for use in our scientific efforts. Analytically, these tools perform functions which can largely be found in publicly-available alternatives. They also perform functions which are relevant to our scientific efforts, but which have not yet been incorporated into our general reporting.